The development of mind-reading technologies raises concerns about potential discrimination and bias, according to the Information Commissioner’s Office (ICO) in the UK.

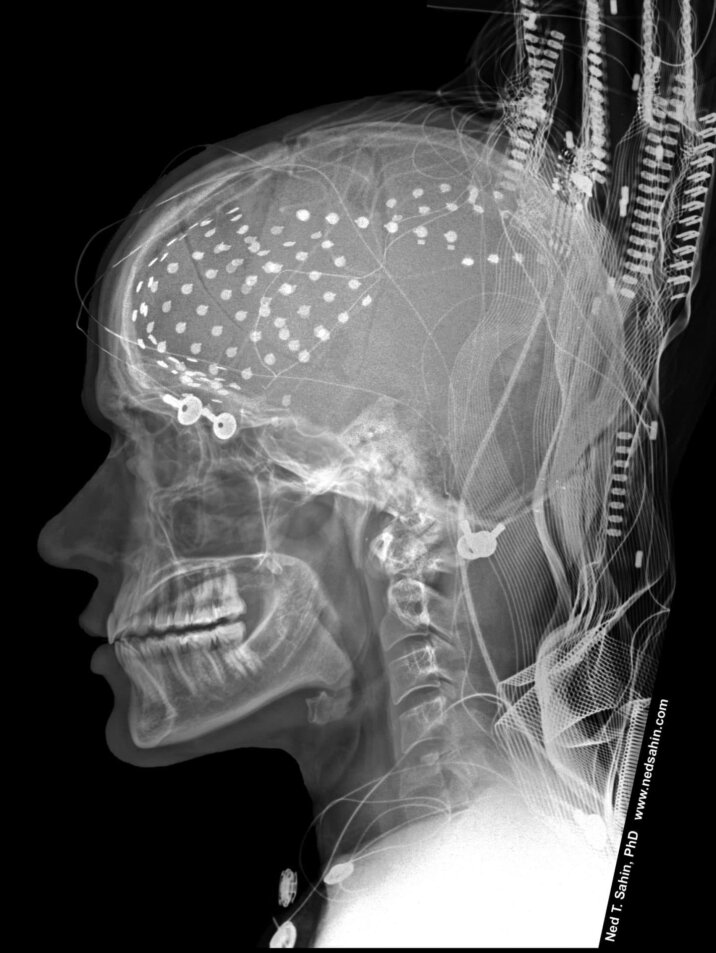

The ICO is developing specific guidance for companies working with neurodata, as technology that monitors information from the brain and nervous system is expected to become widespread within the next decade. These technologies have potential applications in areas such as personal wellbeing, sport, marketing, and workplace monitoring. However, the ICO warns that if they are developed without including neurodivergent people, they could draw inaccurate conclusions about those individuals and lead to discrimination based on those faulty conclusions. There is also a risk of bias against people whose neurological readings are distinctive and may be judged undesirable in certain contexts, such as the workplace.

The ICO emphasizes that neurotechnology collects highly personal information, including data about emotions and complex behaviors, that individuals may not even be consciously aware of. If these technologies are developed or deployed inappropriately, the consequences could be severe. The ICO is calling on organizations to act now so that everyone in society can benefit from neurotechnology and the risk of discrimination is avoided.

In the short term, neurotechnology is expected to have its most significant impact in the medical and related sectors, but within four to five years its use could become far more widespread. In the medium term, the ICO anticipates the emergence of neurodata-led gaming, in which players could control drones remotely through non-invasive brain monitoring. By the end of the decade, neurotechnology is expected to become more integrated into daily life, with scenarios such as children wearing brain monitors for personalized education and marketers using brain scans to study emotional responses to advertising and products.

Current data protection rules, such as the GDPR, would likely cover these practices because they involve processing special category data. However, these technologies also raise new risks, such as neurodiscrimination. The ICO warns that new forms of discrimination could emerge that existing legislation does not currently address; these could be rooted in systemic bias and could produce inaccurate and discriminatory information about individuals and communities.

The Internet Patrol is completely free, and reader-supported. Your tips via CashApp, Venmo, or Paypal are appreciated! Receipts will come from ISIPP.

The approval of Neuralink, Elon Musk’s brain implant company, for human testing further highlights the growing interest in these technologies. Musk envisions brain implants becoming a mainstream technology available to the general public, and has even suggested they could act as a backup drive for a person’s digital soul. As these technologies progress, it becomes increasingly important to address the ethical, legal, and social implications of their use in order to ensure fairness, inclusion, and protection against discrimination.
